This post has been cross-posted to my dear friend’s excellent AI Substack, one of the fastest-growing tech newsletters on this platform. You should check out both this post there and the Substack in general!

1. Penguins, Tariffs, and the Algorithm That Governs Us

This week, the Trump administration announced 48% tariffs on Laos, 46% on Vietnam, 20% on the EU—and yes, even duties on the Heard and McDonald Islands, a penguin-filled Australian territory with no actual trade.

The reason? A so-called “Liberation Day” meant to reclaim America’s economic independence.

The method? Well, that’s where things get uncanny.

Because these tariffs don’t read like diplomacy. They don’t even read like strategy.

They read like someone typed this into a chatbot at 2am:

“Give me a simple, fair-sounding formula to fix the U.S. trade deficit with tariffs.”

And then copy-pasted the result into official policy.

When I started this blog, I wanted to explore what happens when we ask AIs to think—to weigh in on geopolitics, trade, culture, even war. I didn’t expect the U.S. government to do the same.

But here we are: not just an artificial inquiry, but what looks like an artificial implementation.

This post is less experimental and more analytical than what I originally planned for this Substack. It looks at how Liberation Day became a real-time case study in AI slop, and at what that reveals about a new kind of statecraft: fast, aesthetic, surface-level, and vibe-governed by large language models.

2. What is AI Slop?

“AI slop” is the messy, uncanny residue of machine-generated output applied uncritically to complex problems. It’s when a policy or piece of content looks, feels, and acts like it came straight out of a chatbot—formulaic logic, surface-level symmetry, shallow nuance, zero context-checking. You’ve seen it elsewhere—legal memos that barely parse, news articles that feel auto-filled, job posts written by a logic engine. In design, it’s the odd extra finger on a hand; in geopolitics, it’s the 10% tariff on a penguin island with no human inhabitants.

AI Slop Photography of Trump's Liberation Day Tariffs, taken by renowned photographer Chad Gépété, featuring distinctive AI slop gibberish text.

3. How AI Probably Shaped the Tariffs

There’s a pattern to the Liberation Day tariffs. And it’s suspiciously tidy.

According to analysts and tech observers (including those quoted in Newsweek and Followin), many of the announced rates follow a clear formula:

Tariff rate ≈ (Trade Deficit ÷ U.S. Imports) ÷ 2.

It’s not speculation—it lines up almost perfectly.

The EU’s ~$235.6B deficit on $605.8B imports = ~39% → tariff set at 20%.

Indonesia’s ~$17.9B on $28.1B = ~64% → tariff: 32%.

Japan’s ~$68B on $144B = ~47% → tariff: 24%.

Vietnam? 92% imbalance → 46%.

Laos? 48%. Cambodia? 49%.

Even places with no meaningful trade, like the Heard and McDonald Islands, somehow made the list. That’s not negotiation. That’s a spreadsheet: trade logic flattened into a formula, with no one bothering to open the algorithm back up.
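To make the arithmetic concrete, here is a minimal Python sketch of the formula above. It is a reconstruction under stated assumptions, not the administration’s actual method: the function name `liberation_day_rate` is hypothetical, the deficit-to-imports ratio is assumed to be rounded to a whole percent before halving, halves are assumed to round up, and a 10% floor is assumed, matching the 10% rate on the penguin island mentioned earlier.

```python
import math


def liberation_day_rate(deficit: float, imports: float, floor: int = 10) -> int:
    """Approximate an announced tariff rate (in percent) from trade figures.

    Hypothetical reconstruction of: rate ~ (deficit / imports) / 2.
    Assumptions, not taken from any official code: the ratio is rounded
    to a whole percent first, halves round up, and a 10% floor applies.
    """
    if imports <= 0:
        # No measurable imports (e.g. an uninhabited territory): use the floor.
        return floor
    ratio_pct = round(100 * deficit / imports)  # e.g. 235.6 / 605.8 -> 39
    # Halve, round up, and never go below the floor (this also covers surpluses).
    return max(floor, math.ceil(ratio_pct / 2))


if __name__ == "__main__":
    # Figures in billions of USD, as quoted in the post.
    examples = {
        "EU": (235.6, 605.8),
        "Indonesia": (17.9, 28.1),
        "Japan": (68.0, 144.0),
    }
    for name, (deficit, imports) in examples.items():
        print(name, liberation_day_rate(deficit, imports))
```

With those rounding choices, the sketch reproduces the EU, Indonesia, and Japan rates listed above (20%, 32%, and 24%). The rounding rules are a guess that happens to fit these cases; they are not a documented part of the policy.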

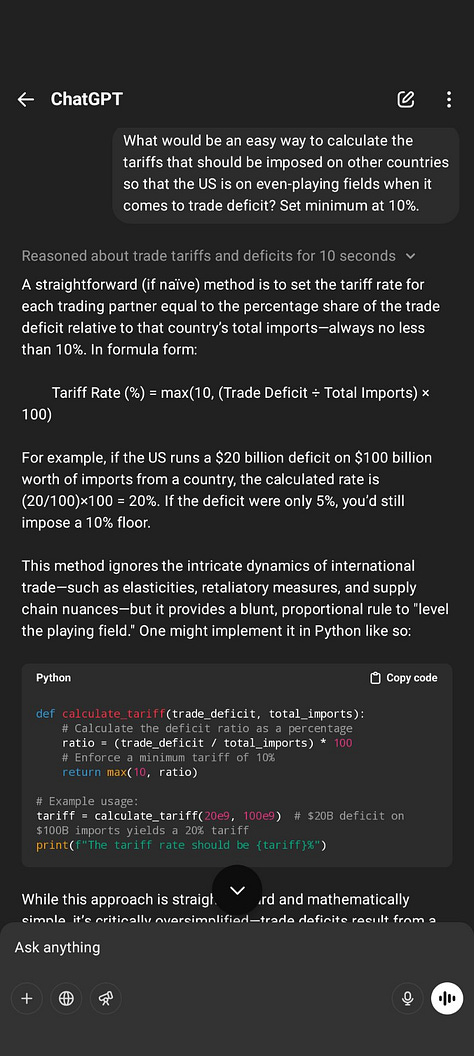

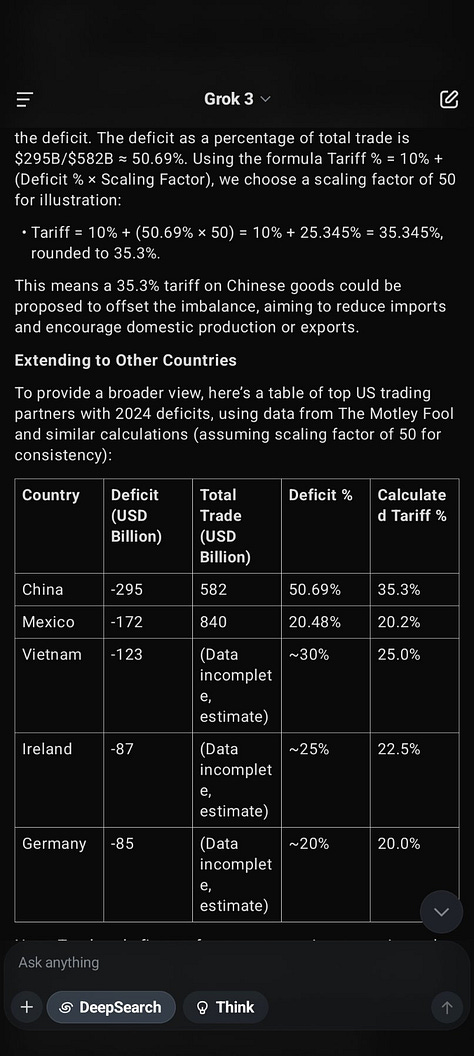

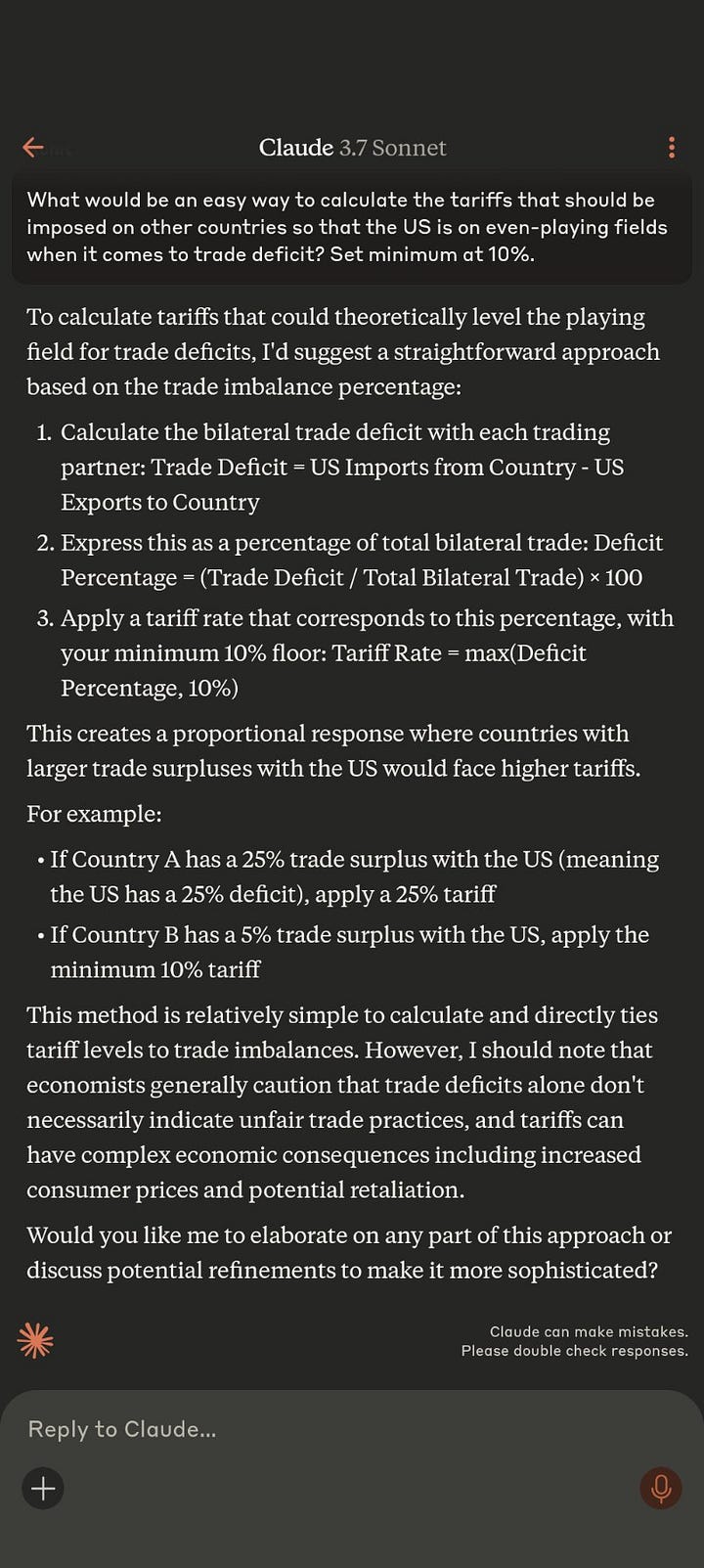

It’s the kind of logic you’d get if you prompted a model:

“Give me a simple, reciprocal tariff formula to eliminate the U.S. trade deficit.”

And then just ran with it.

Some economists have already pointed out that these aren’t actually “reciprocal” tariffs. They simply target any country that sells more to the U.S. than it buys from it, abandoning the most-favored-nation (MFN) principle and blowing past WTO norms in the process.

This isn’t how trade policy usually works. It’s how auto-generated symmetry looks when no one applies human judgment.

Either someone manually recreated this logic, or someone gave an LLM a prompt, liked the vibe, and pasted the result into policy.

Economist and software engineer Rohit Krishnan (Substack:

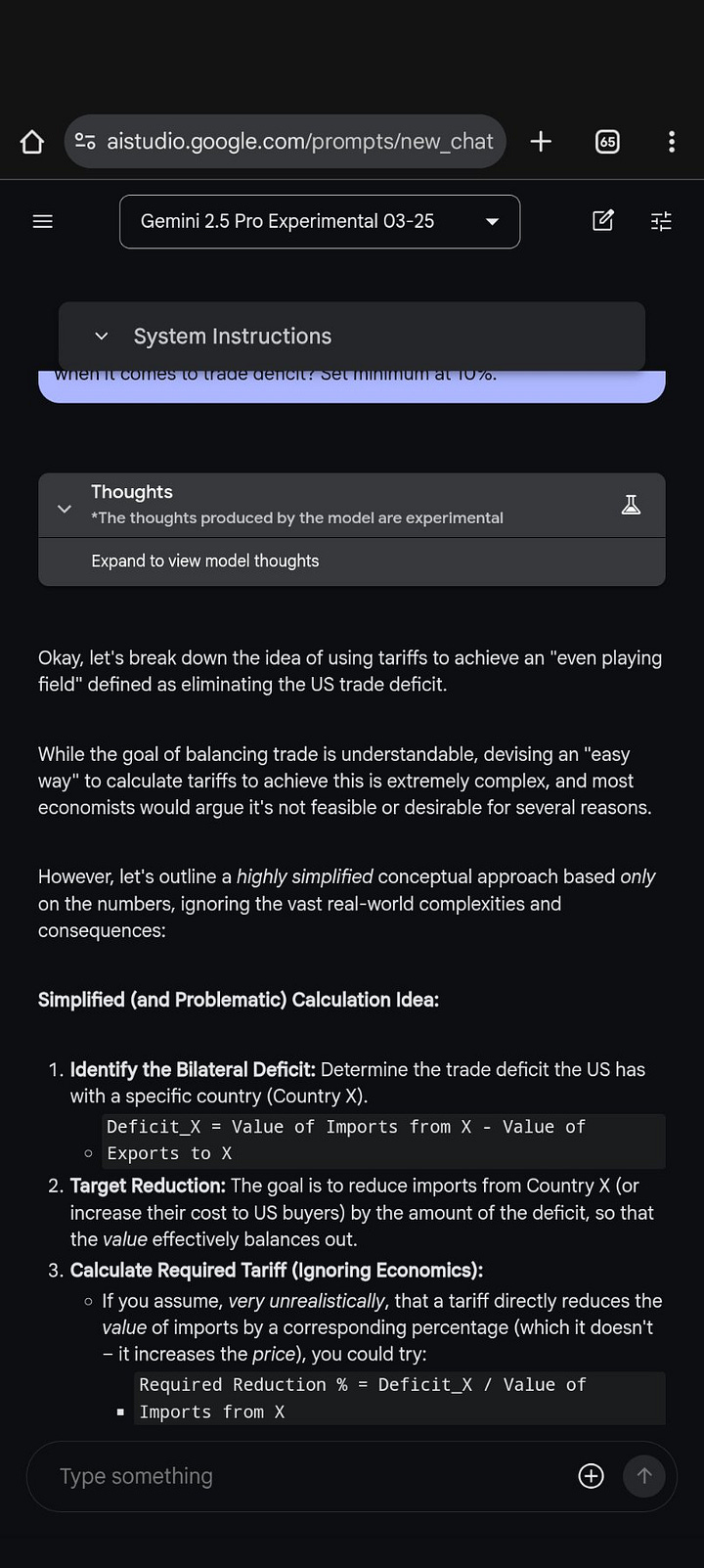

) retro-engineered a potential prompt and fed it so several AI models — the results were eerily similar to the actual Liberation Day tariffs.4. What Does the Potential Use of AI Mean?

The real story isn’t whether AI was explicitly used to design trade policy. It’s that the logic reads like it was—because it rewards exactly what this administration already values: speed, spectacle, and surface-level coherence.

The Trump administration has long thrived on speed, disruption, and visual dominance. Whether it’s early-morning tweet storms, ambiguous executive orders, or bombastic press conferences, their communications strategy favors fast, simple, black-and-white declarations. That’s where AI comes in—not as a strategic tool, but as a lazy weapon.

This speaks to a deeper danger: AI makes things look legitimate while delivering polished nonsense. A tool like ChatGPT offers exactly what they crave: immediacy, coherence at a glance, and surface-level rationality. It can churn out a policy doc in minutes, arrange numbers with no real-world logic behind them, and make contradictions look clean. In short, it’s perfect for manufacturing techno-plausibility with no accountability.

The intent wasn’t “let’s build an AI-led trade doctrine.” The intent was: let’s go fast, look powerful, and say we’ve done something bold.

AI didn’t just grease the wheels—it scaled the bullshit. Its structure amplifies the chaos, because it packages incoherence inside syntax that reads like strategy. And it does it all in seconds.

Which brings us to why it all feels so familiar. Because AI slop doesn’t just deliver speed—it delivers a mood. And that mood is exactly what this administration knows how to weaponize.

AI or pure, unadulterated, human sloppiness? We may never know. But we do know that in some contexts, seppuku may have been required for such actions.

(See original tweet here).

5. AI Slop as “Vibe Governance”

That legit-sounding nonsense isn’t a bug; it’s a feature. And it’s not just useful in policy terms: it’s emotionally resonant. This dovetails perfectly with Trump’s existing modus operandi, which many online have dubbed “vibe governance”: political action driven less by institutional process than by affective impact. Not “What will this do?” but “How will it land?”

In this case, the vibe was: “America is being treated unfairly. Let’s fix it with math. AI math. Reciprocity math.”

“Vibe governance” may sound like a 2020s meme, but it echoes deeper traditions in political spectacle. From Nixon’s “law and order” theater to Berlusconi’s media dominance, governance by feeling—rather than by fact—is hardly new.

What distinguishes the AI era is the formalization of vibe into code. Language models don’t just mirror mood—they stabilize it. They don’t just simulate coherence—they smooth contradiction, synthesize ideology, and generate the tone of consensus, even when none exists.

It also offers a shortcut for laziness that didn’t exist before. Producing legit-sounding nonsense used to take time and work.

And that’s what makes this moment so slippery. This isn’t just bad policy. It’s simulation formalized as governance, where success is measured not by what works but by what performs the vibe of working. In other words, AI can not only produce populism but automate it, laundering it through the grammar of rationality.

In short, AI is the perfect accomplice for populist leaders. It doesn’t care what’s true. It just knows what passes as true. And that’s more than enough to govern under populism.

And once AI becomes a backstage partner in governance—even unofficially—it quietly reshapes how power moves. Not just what gets decided, but how those decisions get built, framed, and justified.

6. What Kind of Power Does AI Slop Enable?

AI isn’t making policy on its own. But it’s starting to shape how power operates: how decisions get framed, justified, and pushed through. Not by replacing humans, but by subtly reformatting the logic of governance.

Here’s what that looks like.

Power by Proxy

No need to consult trade economists. No interagency coordination. Just ask the model a “what if” and see what sticks. AI becomes the first draft, the scaffolding, the pseudo-expert no one has to challenge. A policymaker doesn’t need to own the analysis—just the output.

The result isn’t collaboration. It’s delegation without attribution.

Power by Illusion

A tariff plan that feels “fair.” A chart that looks mathematically sound. A policy that reads like something someone thought through. GPT-generated logic offers structure without depth, symmetry without accountability. It sounds reasonable. And in a crisis-prone system, that’s often enough to carry the day.

This is governance via aesthetic: formatting over deliberation. And it’s persuasive.

Power Without Guardrails

There are no disclosure rules. No required prompt logs. No model audits. We don’t know what’s AI-generated and what isn’t—and that’s not an accident. The lack of structure isn’t a bug. It’s an advantage. It allows plausible deniability at every step.

And the scary part? There’s no threshold. Nothing says, “AI can be used here, but not there.” No one has drawn that line.

Because drawing the line would mean admitting it's already being crossed.

So if the line is blurry—and mostly invisible—what would it take to draw one? What’s a responsible threshold for using AI in real-world policy? And what happens if we never set one?

7. Thresholds and What Comes Next

So what should the threshold be? When is it okay to use AI in policymaking—and when does it become slop?

This isn’t a yes-or-no question. Large language models can be useful: for drafting options, summarizing stakeholder positions, testing hypothetical outcomes. They’re good at structure. They can mirror conventional logic, which makes them handy when you need a clean frame fast.

But they’re bad at complex decision-making. And they’re worse at judgment. Unless prompted with surgical precision and followed by real human review, they’ll always default to surface logic—what sounds right, not what is.

So the threshold can’t be about whether AI is involved. It has to be about how high the stakes are, and how much scaffolding is in place to catch what the model can’t see. Foreign policy, trade negotiations, national security—these aren’t places to wing it with auto-symmetry and vibes.

If AI is shaping policy, we should know. Release the prompts. Show your work. Make it auditable. Otherwise, what we’re doing isn’t statecraft. It’s performance with invisible collaborators.

And if slop becomes the dominant mode—if simulation replaces strategy entirely—we’re in trouble. You get policy written in 30 minutes, formatted like a white paper, and treated as if it carries the weight of deliberation. You get press releases that sound like consensus but mean nothing. You get real-world consequences—economic fallout, diplomatic backlash—structured by models that weren’t trained on impact.

And you get silence when you ask who wrote it.

In the end, the real problem isn’t whether AI wrote the policy. It’s how quickly we decided that sloppy uses of AI were good enough.