The Data Center Reckoning: Power, Water, and Who Really Pays

How the race to build AI infrastructure is straining power grids, draining aquifers, and leaving communities holding the bag

You’ve probably been hearing about data centers everywhere lately. Stories about them growing at ridiculous speeds. Communities getting angry about their environmental impact. Electricity bills going up. Water shortages. Towns fighting to keep them out.

There are real issues here—but also a lot of confusion about what’s actually happening. So I looked into it, trying to translate for myself and anyone else wondering: What exactly are these things? Why are they suddenly such a big deal? Why are people so upset about them? And what, if anything, can actually be done?

This is what I found.

I. The Basics of Data Centers and AI

A. What are Data Centers?

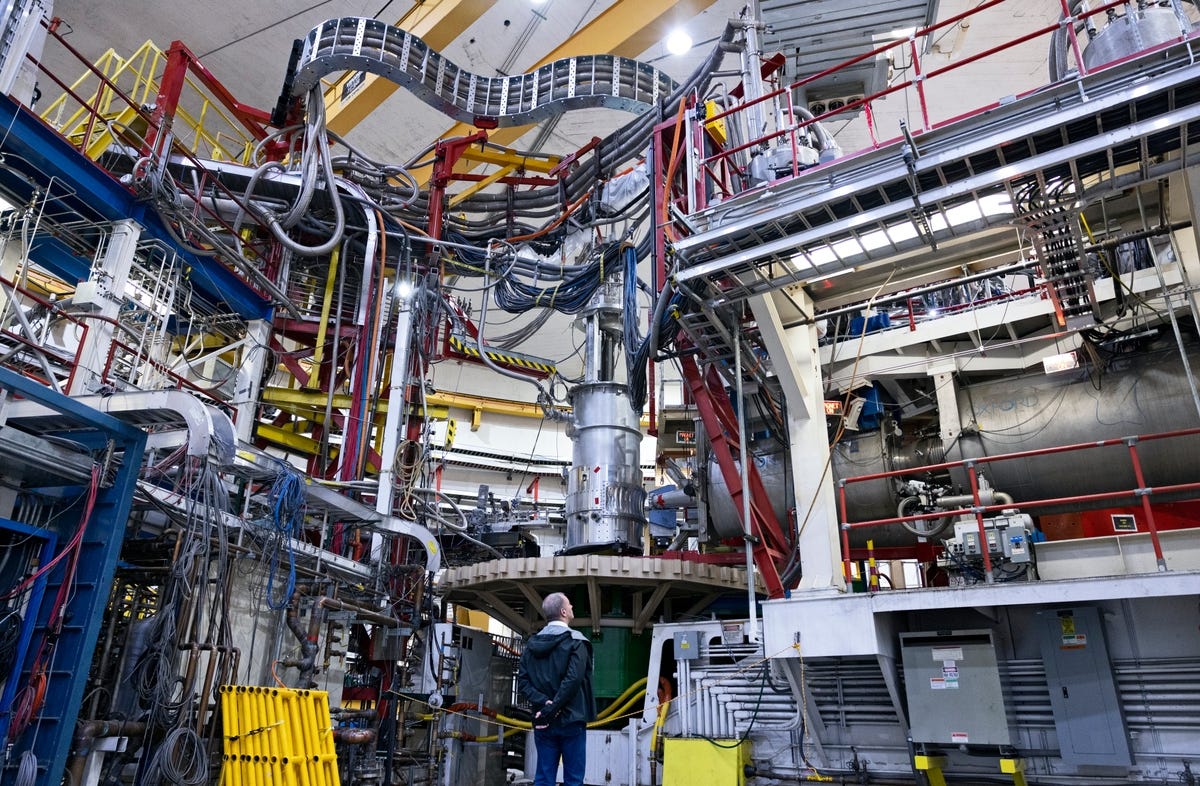

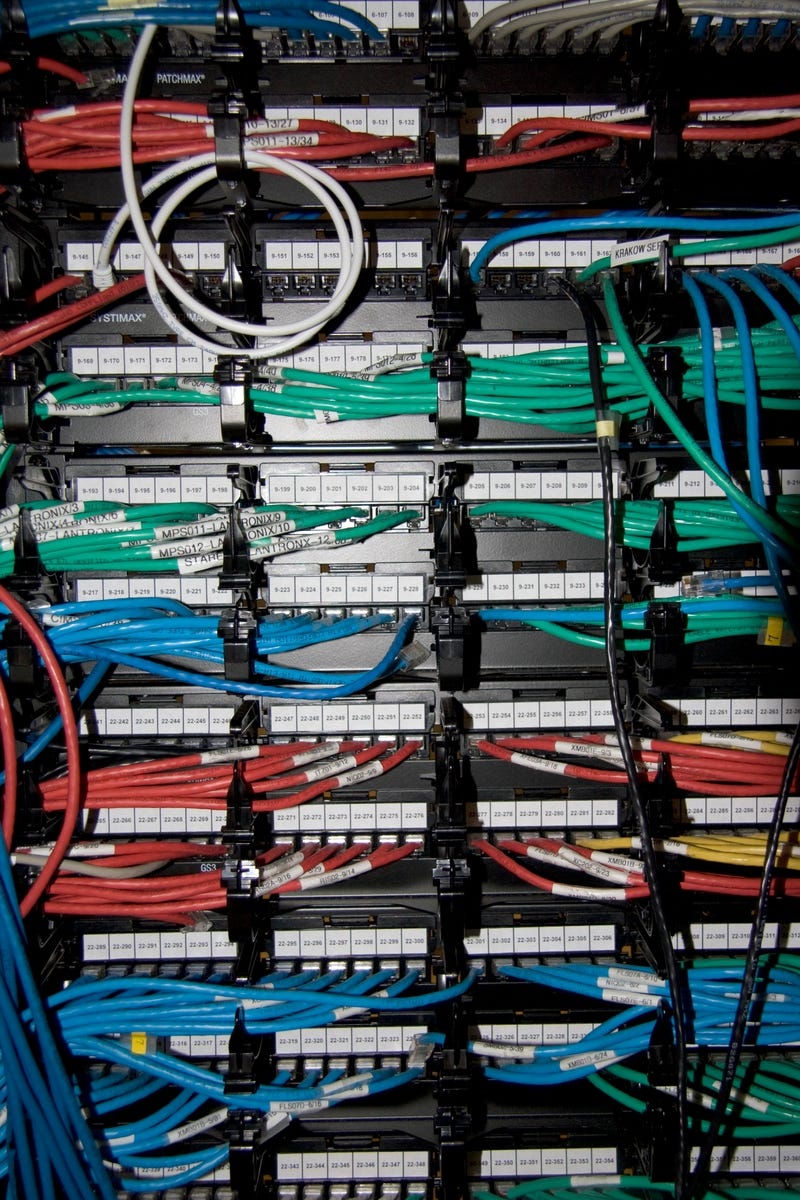

When you use ChatGPT, watch Netflix, or check your email, you’re not just using your phone or laptop. You’re connecting to a data center—a building (or campus of buildings) packed with thousands of computer servers doing the actual work.

The cloud isn’t a cloud. It’s buildings full of computers.

Here’s why they exist: If you had to run ChatGPT on your own device, you’d need dramatically more powerful—and expensive—hardware at home. Your laptop would need to be as powerful as a small data center. Most people would simply go without these services entirely. Centralizing all that computing power into specialized facilities makes sense. It’s more efficient, more secure, and professionally managed.

Data center hardware spending hit $280 billion in 2024, up 34% from just one year earlier. In the first half of 2024 alone, data center capacity in major U.S. markets grew by 24%. Blackstone and Digital Realty launched a $7 billion project. One company is planning five campuses in Texas that together would use as much electricity as 4.5 million homes. Meta is building a $10 billion facility in Louisiana.

The companies building the biggest facilities are called hyperscalers—tech giants like Amazon, Microsoft, and Google. These three alone own over half of the world’s largest data centers.

B. How AI is Different

Data centers have existed for decades. What changed is AI.

Traditional data centers handled things like storing files, running websites, and streaming videos. AI is fundamentally different. It requires massive computing power running constantly. When you ask ChatGPT a question, it’s not just pulling up stored information like Google search. It’s performing billions of calculations in real-time using specialized chips running at maximum capacity.

In concrete terms, a regular computer chip might use 150-200 watts of power. AI chips use 700-1,200 watts each. Traditional data center equipment racks use 7-10 kilowatts of power. AI racks can use 30-100 kilowatts. The newest systems use about 120 kilowatts per rack, roughly the same as running 80 window AC units in a single equipment cabinet. A large AI training facility can use 500 megawatts continuously, enough to power about 400,000 homes.
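The comparisons above are unit conversions. Here is a minimal sketch, assuming a window AC unit draws about 1.5 kW and an average U.S. home draws about 1.25 kW on average (roughly 10,950 kWh per year). Both constants are assumptions, chosen because they reproduce the article's own ratios, not measured values.

```python
# Rough power equivalences. Both constants are assumptions, not measurements.
WINDOW_AC_KW = 1.5   # assumed draw of one window AC unit, in kilowatts
AVG_HOME_KW = 1.25   # assumed average continuous draw of one U.S. home, in kilowatts


def ac_units_equivalent(rack_kw: float) -> float:
    """How many window AC units draw the same power as one rack."""
    return rack_kw / WINDOW_AC_KW


def homes_equivalent(facility_mw: float) -> float:
    """How many average homes a continuous facility load could supply."""
    return facility_mw * 1000 / AVG_HOME_KW  # MW -> kW, then divide per home


if __name__ == "__main__":
    print(f"120 kW rack   ~ {ac_units_equivalent(120):.0f} window AC units")
    print(f"500 MW campus ~ {homes_equivalent(500):,.0f} homes")
    print(f"2,200 MW      ~ {homes_equivalent(2200):,.0f} homes")
```

The same home assumption also puts 2,200 MW at about 1.76 million homes, in line with the Louisiana figure discussed later.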

C. Why Does AI Use So Much Energy?

Two things happen with AI that eat up energy:

Training is when companies build a new AI model, like teaching a system to understand language by showing it essentially the entire internet. This requires thousands of powerful computer chips running continuously for weeks or months. Training GPT-3 used an estimated 1,300 megawatt-hours of electricity, enough to power about 120 average U.S. homes for a year. Training GPT-4 used roughly 40 times more.

Inference is when you actually use the AI—every time you ask ChatGPT a question or generate an image. Each query uses less power than training, but it happens billions of times per day, constantly, from users worldwide. Early estimates suggested one ChatGPT query uses about 10 times more electricity than a regular Google search, though other analyses contest this figure.
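Inference adds up because of volume. The sketch below multiplies a per-query energy by a daily query count and compares the result with a one-time training run. Every input is a hypothetical placeholder (3 Wh per query, 1 billion queries per day, a 50 GWh training run) chosen only to show the arithmetic, because real figures are not disclosed.

```python
# Compare recurring inference energy with a one-time training run.
# All inputs are hypothetical placeholders, not disclosed figures.


def daily_inference_mwh(wh_per_query: float, queries_per_day: float) -> float:
    """Energy used by inference in one day, in megawatt-hours."""
    return wh_per_query * queries_per_day / 1_000_000  # Wh -> MWh


def days_to_match_training(training_mwh: float, wh_per_query: float,
                           queries_per_day: float) -> float:
    """Days of inference needed to equal the energy of one training run."""
    return training_mwh / daily_inference_mwh(wh_per_query, queries_per_day)


if __name__ == "__main__":
    WH_PER_QUERY = 3.0      # hypothetical energy per query, watt-hours
    QUERIES_PER_DAY = 1e9   # hypothetical daily query volume
    TRAINING_MWH = 50_000   # hypothetical training run (50 GWh)

    daily = daily_inference_mwh(WH_PER_QUERY, QUERIES_PER_DAY)
    days = days_to_match_training(TRAINING_MWH, WH_PER_QUERY, QUERIES_PER_DAY)
    print(f"Inference: {daily:,.0f} MWh/day, about {daily / 24:,.0f} MW continuous")
    print(f"Inference matches the training run after {days:.1f} days")
```

Under these placeholder inputs, less than a month of inference would use more energy than the entire training run, which is why per-query efficiency matters as much as training efficiency.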

The problem: we don’t actually know the real numbers. Companies treat energy and water consumption as trade secrets, so most published figures are estimates. That opacity makes it very hard for communities to understand the true impact.

What we do know: AI workloads are projected to make up 70% of all data center demand by 2030—meaning most of this infrastructure build-out is driven specifically by the AI boom, not general cloud services.

II. The Resource Problem: Power, Emissions, and Water

A. The Grid Wasn’t Built for This

Data centers used about 1-1.5% of global electricity in 2024. That share could roughly double by 2030, with much faster growth in regions where AI facilities cluster. In the U.S., data centers are expected to reach 6% of total electricity consumption by 2026, growing about 12% every year through 2030.

Take Northern Virginia—the highest concentration of data centers anywhere in America. The local utility says power demand will grow 85% over fifteen years, with data centers quadrupling their electricity use.
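The growth figures in the two paragraphs above are easier to compare as compound annual rates. This minimal sketch uses only the article's numbers: 12% a year from 2026 to 2030, 85% total growth over fifteen years, and a fourfold increase over the same fifteen years.

```python
# Convert between total growth over a period and a compound annual rate.


def compound(annual_rate: float, years: float) -> float:
    """Growth multiple after compounding annual_rate for the given years."""
    return (1 + annual_rate) ** years


def implied_annual_rate(multiple: float, years: float) -> float:
    """Annual rate that turns 1.0 into `multiple` over `years`."""
    return multiple ** (1 / years) - 1


if __name__ == "__main__":
    # U.S. data center demand growing 12% a year from 2026 to 2030 (4 years).
    print(f"2026->2030 multiple: {compound(0.12, 4):.2f}x")
    # Northern Virginia: total demand +85% and data centers 4x, over 15 years.
    print(f"Total demand CAGR:   {implied_annual_rate(1.85, 15):.1%}")
    print(f"Data center CAGR:    {implied_annual_rate(4.0, 15):.1%}")
```

Twelve percent a year works out to about 1.57 times the 2026 level by 2030. In Northern Virginia, a fourfold rise over fifteen years means data center demand growing roughly 9.7% a year, more than twice the pace of total demand (about 4.2%).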

The existing electrical infrastructure simply wasn’t designed for this. When a company wants to connect a massive new data center, it joins what’s called an interconnection queue, basically a waitlist to hook up to the power grid. In places like Virginia, that wait is now 4-7 years. PJM, the grid operator covering 13 states plus Washington, D.C., saw prices in its capacity auction (what it pays to guarantee enough power supply in future years) climb more than tenfold for 2026-2027 compared with two years earlier, partly due to data center growth.

In a survey, 72% of data center companies said their biggest challenge is getting enough power.

B. The Fossil Fuel Comeback

Here’s where things get uncomfortable. Elon Musk’s xAI is running dozens of gas turbines at its Memphis facility without proper permits, according to multiple investigations and lawsuits. Meta’s Louisiana facility will be powered by three new gas plants providing 2,200 megawatts, enough for about 1.8 million homes.

The pattern is clear: we can’t build clean energy fast enough, so fossil fuels are making a comeback. Southern Company reversed plans to close coal plants. Coal generation increased nearly 20% in 2024. Analysts estimate 60% of new power demand through 2030 will be met by natural gas, with 99 gigawatts of new gas-fired capacity planned across 38 states.

Natural gas produces about half the CO₂ of coal per unit of electricity, but there’s a price risk: gas prices are near multi-year lows right now. When they spike again, as they historically have, operating costs for these facilities will jump dramatically. And the cumulative impact? A 2024 Morgan Stanley report projects data centers will emit 2.5 billion tonnes of greenhouse gases worldwide through 2030, roughly triple what would have happened without the AI boom.
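The price risk can be made concrete with the standard fuel-cost relationship: fuel cost per megawatt-hour equals the plant's heat rate times the gas price. The heat rate (7 MMBtu per MWh, roughly a modern combined-cycle plant) and the two gas prices below are assumptions for illustration. They are not figures from the article.

```python
# Fuel cost of gas-fired electricity: heat rate (MMBtu/MWh) x gas price ($/MMBtu).
# Heat rate and prices are illustrative assumptions.

HEAT_RATE_MMBTU_PER_MWH = 7.0  # assumed, roughly a combined-cycle plant
HOURS_PER_YEAR = 8760


def fuel_cost_per_mwh(gas_price_per_mmbtu: float,
                      heat_rate: float = HEAT_RATE_MMBTU_PER_MWH) -> float:
    """Fuel cost of one megawatt-hour of electricity, in dollars."""
    return heat_rate * gas_price_per_mmbtu


def annual_fuel_cost(load_mw: float, gas_price_per_mmbtu: float) -> float:
    """Fuel cost for a load served at full output every hour of the year."""
    mwh_per_year = load_mw * HOURS_PER_YEAR
    return mwh_per_year * fuel_cost_per_mwh(gas_price_per_mmbtu)


if __name__ == "__main__":
    for price in (2.50, 8.00):  # hypothetical low price and spike price
        print(f"${price:.2f}/MMBtu -> ${fuel_cost_per_mwh(price):.2f}/MWh, "
              f"${annual_fuel_cost(2200, price) / 1e9:.2f}B/yr for 2,200 MW")
```

Fuel cost scales linearly with the gas price, so a move from $2.50 to $8.00 raises it 3.2 times. Fuel is only part of a plant's operating cost, and real plants do not run at full output every hour, so treat the annual figures as an upper-bound illustration.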

C. The Water Crisis Nobody’s Talking About

Data centers have a water crisis that’s been overshadowed by the energy debate. A single large facility can consume up to 5 million gallons per day for cooling—roughly the usage of a town of 50,000 people. Training GPT-3 alone required over 700 kiloliters of water.

And that’s just direct consumption. The bigger impact is indirect: the water used to generate the electricity that powers data centers. Berkeley Lab estimated this indirect water footprint for U.S. data centers at 800 billion liters in 2023, water that’s either evaporated during power generation or returned to waterways at higher temperatures.
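For readers who think in gallons, the conversions are straightforward. This sketch uses the exact definition of a U.S. gallon (3.785411784 liters) and only the article's figures. The per-person number is simply the 5-million-gallon facility divided by the 50,000-person town it is compared with.

```python
# Water unit conversions for the figures above.
LITERS_PER_US_GALLON = 3.785411784  # exact by definition


def liters_to_gallons(liters: float) -> float:
    """Convert liters to U.S. gallons."""
    return liters / LITERS_PER_US_GALLON


def gallons_per_person_per_day(facility_gal_per_day: float, population: int) -> float:
    """Per-person daily use implied by comparing a facility with a town."""
    return facility_gal_per_day / population


if __name__ == "__main__":
    print(f"Per-person equivalent: {gallons_per_person_per_day(5_000_000, 50_000):.0f} gal/day")
    print(f"Large facility per year: {5_000_000 * 365 / 1e9:.3f} billion gallons")
    print(f"GPT-3 training (700 kL): {liters_to_gallons(700_000):,.0f} gallons")
    print(f"Indirect, 2023 (800 billion L): {liters_to_gallons(800e9) / 1e9:.0f} billion gallons")
```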

In water-stressed regions like Arizona (which experienced drought across 99% of its territory in 2021), this isn’t abstract. It’s why Tucson passed strict “mega-user” water rules requiring conservation plans after a proposed data center project raised alarms. When your aquifer is already running dry, watching millions of gallons per day cool computer chips feels less like economic development and more like theft.

III. The Systemic Problems: Economics, Infrastructure, and Accountability

The resource crisis is just the surface. Three deeper problems make this fundamentally unsustainable.

A. The Economics Don’t Add Up Yet

Here’s the uncomfortable question nobody wants to ask: is the money actually there?

The AI boom is built on a bet that future revenues will justify today’s massive infrastructure spending. OpenAI lost $5 billion in 2024 on $3.7 billion in revenue. The hyperscalers (Amazon, Microsoft, Google) are profitable, but their “AI revenue” often means existing cloud customers staying because of AI features—not new AI-driven income.

The bet is that by 2030, AI revenues will justify today’s spending. Short hardware lifespans raise the stakes: data center AI chips have an operational lifespan of about three years, compared to 5-7 years for traditional servers, so operators face a relentless upgrade cycle and must keep reinvesting just to stay competitive. If the revenue doesn’t arrive, communities could be stuck with expensive electrical infrastructure built for facilities that shut down or relocate. The costs (grid upgrades, subsidized power, water infrastructure) remain. The benefits vanish. It’s the kind of stranded asset problem that haunted communities after previous tech bubbles burst.
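The mismatch between hardware life and infrastructure life is simple arithmetic. Here is a minimal sketch, assuming straight-line depreciation and a hypothetical $10 billion hardware purchase. The three-year lifespan comes from the article, six years is the midpoint of its 5-7 year range, and the 30-year horizon is an assumed life for the grid and building investment.

```python
# Straight-line depreciation and refresh cycles for server hardware.
# The $10B purchase and 30-year horizon are hypothetical.


def annual_depreciation(cost: float, lifespan_years: float) -> float:
    """Straight-line: spread the purchase cost evenly over its useful life."""
    return cost / lifespan_years


def refresh_cycles(horizon_years: float, lifespan_years: float) -> int:
    """Full hardware generations bought over an infrastructure horizon."""
    return int(horizon_years // lifespan_years)


if __name__ == "__main__":
    COST = 10e9   # hypothetical hardware spend, dollars
    HORIZON = 30  # hypothetical life of the grid and building investment, years
    for label, life in (("AI accelerators", 3), ("traditional servers", 6)):
        print(f"{label}: ${annual_depreciation(COST, life) / 1e9:.2f}B/yr, "
              f"{refresh_cycles(HORIZON, life)} purchases over {HORIZON} years")
```

Halving the lifespan doubles the yearly cost of simply keeping the hardware current, which is why revenue has to arrive quickly for the bet to pay off.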

B. The Physical World Isn’t Cooperating

Even if the money were there, the physical world isn’t cooperating. Two-thirds of companies cite supply chain shortages for critical grid components like transformers and circuit breakers, many of which come from China. But the labor shortage is even worse. 63% of companies say finding skilled workers is a major challenge. The U.S. Bureau of Labor Statistics projects over 81,000 openings for electricians every year this decade, while 30% of current union electricians are approaching retirement.

You can’t prompt-engineer your way around transformer lead times or a shortage of licensed electricians.

C. Nobody’s Accountable

Companies don’t have to disclose how much energy or water they actually use. We’re making 30-year infrastructure bets based on 3-year technology cycles, with no plan for what happens if the AI boom fizzles. Most communities only get input after projects are announced and deals are struck—when changing course is nearly impossible. Tax breaks are negotiated behind closed doors. Job numbers don’t distinguish local hires from imported specialists. Grid upgrade costs are buried in utility rate cases. By the time anyone can assess whether a data center was actually good for a community, it’s years too late.

Researchers have proposed that AI papers include energy and carbon calculations, and that should be mandatory for commercial deployments too. A bill called the Clean Cloud Act would create disclosure requirements, but it has not become law.
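Energy and carbon calculations of this kind commonly follow one pattern. Multiply the energy used by the IT equipment by the facility's power usage effectiveness (PUE, total facility energy divided by IT energy) to get total energy. Then multiply by the carbon intensity of the grid supplying it. The sketch below is a minimal version of that pattern, not any specific proposal's method. The inputs (1,000 MWh of IT energy, a PUE of 1.2, and two grid intensities) are hypothetical.

```python
# Operational carbon estimate: IT energy x PUE x grid carbon intensity.
# All inputs below are hypothetical.


def total_energy_mwh(it_energy_mwh: float, pue: float) -> float:
    """Facility energy, including cooling and power losses, from IT energy."""
    return it_energy_mwh * pue


def emissions_tonnes(it_energy_mwh: float, pue: float,
                     grid_kg_co2_per_kwh: float) -> float:
    """Operational emissions in tonnes of CO2."""
    kwh = total_energy_mwh(it_energy_mwh, pue) * 1000  # MWh -> kWh
    return kwh * grid_kg_co2_per_kwh / 1000            # kg -> tonnes


if __name__ == "__main__":
    IT_MWH = 1_000  # hypothetical IT energy for a job or deployment
    PUE = 1.2       # hypothetical facility efficiency
    for grid, intensity in (("cleaner grid", 0.1), ("gas-heavy grid", 0.4)):
        print(f"{grid}: {emissions_tonnes(IT_MWH, PUE, intensity):,.0f} t CO2")
```

In this example the same workload differs fourfold in emissions depending only on where it runs, so useful disclosure needs location and grid mix, not just energy. It also covers operational emissions only, not the emissions from manufacturing the hardware.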

IV. When the Promise Meets Reality: The Community Cost

Given all these problems, who actually bears the cost? Communities who were sold a different story.

A. The Compelling Pitch

To understand why cities rolled out the welcome mat, look at the numbers. A 2021 PwC report showed each direct data center job supports six more through supply chains and local spending. The industry contributed nearly $500 billion to U.S. GDP and almost $100 billion in tax revenue to federal, state, and local governments. Arizona alone saw $863 million in state and local tax benefits in 2023.

For cash-strapped municipalities, these were compelling promises—until residents discovered what came with them.

B. The Hidden Costs

Here’s what’s really happening: communities are subsidizing the data center boom, often without realizing it.

In Northern Virginia, residential electricity bills are expected to increase by $14-37 per month by 2040 (in inflation-adjusted dollars) due to data center growth. Those grid upgrades to connect massive data centers? In many regions, costs can be spread across all ratepayers through “network upgrade” cost recovery policies—meaning you might be subsidizing Google’s infrastructure through your monthly electric bill, whether you use their services or not.
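To see how socialized upgrade costs reach individual bills, divide the cost recovered from ratepayers by the number of customers and the recovery period. This is a deliberately simplified sketch. The $1 billion upgrade, 2.5 million customers, and 20-year recovery period are hypothetical, and it ignores the utility's allowed return, financing costs, and how costs are split between customer classes.

```python
# Simplified spread of a grid-upgrade cost across all ratepayers.
# Hypothetical inputs; ignores utility return, interest, and rate-class allocation.


def monthly_cost_per_customer(upgrade_cost: float, customers: int,
                              recovery_years: int) -> float:
    """Even split of an upgrade cost per customer per month."""
    return upgrade_cost / customers / (recovery_years * 12)


if __name__ == "__main__":
    cost = monthly_cost_per_customer(1e9, 2_500_000, 20)
    print(f"${cost:.2f} per customer per month for one hypothetical upgrade")

    # The article's projected Northern Virginia increase, annualized.
    low, high = 14 * 12, 37 * 12
    print(f"$14-37/month is ${low}-${high} per year")
```

One upgrade can look small on a single bill. The concern is that many such upgrades, plus new generation, accumulate over years.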

Many states offer huge tax breaks to attract data centers. Virginia gave over $100 million in sales tax exemptions to data centers in recent years. That’s money that could have funded schools or roads. The jobs they do create are often specialized positions filled by workers brought in from elsewhere, not locals.

But the costs aren’t just financial. Residents near data centers deal with constant noise from industrial cooling systems that run 24/7, plus backup generators that are fired up regularly for testing. Gas turbines raise air quality concerns in communities already dealing with environmental burdens. Large battery backup systems pose fire risks: the Phoenix Fire Department has highlighted how “thermal runaway” in lithium-ion battery arrays creates fires that are difficult to extinguish and release toxic smoke. And the facilities themselves take up huge amounts of land that could have been housing, parks, or businesses that employ more local people.

C. The Backlash

These accumulated grievances explain why cities are starting to push back. Phoenix held hearings in 2024 on new data center regulations. The city received hundreds of pages of public comments. Industry giants like Google pushed back hard, arguing that requiring utilities to guarantee power within two years was impossible and would halt development.

But Phoenix passed the rules anyway, requiring data centers to get special permits with health and safety conditions.

The pattern is consistent: cities initially welcome data centers for promised jobs and tax revenue. Then residents discover the reality (higher electric bills, water concerns, noise, limited actual employment) and push back. Ireland paused new data center connections near Dublin until 2028 to manage grid stability. A Michigan township tried to block a 250-acre facility and was sued; after community outcry, it settled with restrictions on the project.

V. What Comes Next: Solutions, Limitations, and What You Should Know

A. Why Technology Alone Won’t Save Us

The industry is betting efficiency gains will outpace demand growth. But here’s the catch: AI training, the most energy-intensive task per job, accounts for only 10-20% of AI workloads. The other 80-90% is inference: answering your ChatGPT queries in real time. That can’t be deferred to off-peak hours, so the flexibility needed for grid-friendly operations isn’t there.
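The limited flexibility follows directly from that split. Here is a minimal sketch, assuming as a simplification that only training load can shift to off-peak hours and that all inference must run when requested. The 500 MW facility size matches the earlier training-facility example, and the 10-20% training share is the article's range.

```python
# How much of a facility's load could shift to off-peak hours,
# assuming only training is deferrable (a simplification).


def deferrable_mw(facility_mw: float, training_share: float) -> float:
    """Load that could, in principle, be moved to off-peak hours."""
    return facility_mw * training_share


if __name__ == "__main__":
    FACILITY_MW = 500
    for share in (0.10, 0.20):
        flex = deferrable_mw(FACILITY_MW, share)
        print(f"Training {share:.0%}: {flex:.0f} MW flexible, "
              f"{FACILITY_MW - flex:.0f} MW must run on demand")
```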

Tech solutions exist. Liquid cooling can reduce cooling energy by up to 90%, but retrofitting existing facilities is expensive and slow. Companies are signing deals for nuclear power restarts and small modular reactors, but skepticism persists about whether they’ll deliver on promised timelines. Clean energy solutions face long development cycles, high costs, and regulatory hurdles. Meanwhile, natural gas (cheap, proven, fast to build) is what’s actually getting built.
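Data center efficiency is usually summarized as power usage effectiveness (PUE): total facility power divided by the power delivered to IT equipment, so 1.0 would mean zero overhead. The sketch below shows what cutting cooling energy by 90% would do to PUE for a hypothetical facility with 100 MW of IT load, 40 MW of cooling, and 10 MW of other overhead. Those loads are assumptions, not measurements of any real site.

```python
# PUE = total facility power / IT power. Loads below are hypothetical.


def pue(it_mw: float, cooling_mw: float, other_mw: float) -> float:
    """Power usage effectiveness for a facility."""
    return (it_mw + cooling_mw + other_mw) / it_mw


if __name__ == "__main__":
    IT, COOLING, OTHER = 100.0, 40.0, 10.0
    before = pue(IT, COOLING, OTHER)
    after = pue(IT, COOLING * (1 - 0.90), OTHER)  # 90% less cooling energy
    saved = (before - after) * IT                 # MW of overhead removed
    print(f"PUE {before:.2f} -> {after:.2f}, saving about {saved:.0f} MW")
```

The IT load itself does not shrink, so cooling gains are capped by the overhead. Even eliminating cooling entirely would only bring this example from 1.5 to 1.1, which is one reason efficiency alone cannot offset rapid growth in IT demand.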

B. What Actually Doing This Right Would Require

The realistic path forward has three parts.

First, policy: mandate transparency on energy and water use, require environmental assessments before construction, streamline permitting for projects paired with dedicated clean energy—the DOE is already piloting this on federal lands.

Second, technology: enhanced geothermal for 24/7 baseload power and advanced cooling systems. The technology exists; it just isn’t being deployed fast enough.

Third, the catch: this requires collaboration between tech companies, energy developers, utilities, and communities. It means different incentive structures. Some operators are exploring “shared energy economy” models where data center backup power supports grid reliability for everyone. The pieces exist. What’s missing is the political will to change the incentives.

C. What This Means for AI Timelines

Here’s what doesn’t get enough attention: these physical constraints are real limits on how fast AI can develop. All the predictions about AI transformation in the next few years assume we can build the infrastructure to support it. But we can’t connect to the power grid fast enough (4-7 year waits). Water is running out where facilities are being built. Supply chain shortages for transformers and circuit breakers mean money won’t speed things up. Clean energy projects sit in queues while fossil plants get built. And if the economics don’t work, communities are stuck with stranded assets.

You can’t download your way out of these problems. They’re not software challenges that clever algorithms can solve. So when you hear predictions about AGI arriving in 2027 or 2028, ask: where’s the power coming from? Who’s building it? How much will it cost communities? What happens if it doesn’t work out? Those aren’t anti-AI questions—they’re practical questions about whether the physical world can support the AI future being promised.

D. What This Means for You

If you live near a proposed or existing data center, three things will tell you whether your community is getting a fair deal:

Can you see the numbers? If environmental impact data isn’t public, that’s a red flag.

Who’s paying for grid upgrades? Check utility rate cases in your state—the costs might already be on your bill.

Are there real community benefits? Count actual local jobs created (not promised), compare to tax breaks given, and track whether infrastructure costs are being socialized.
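The third check reduces to two ratios. Here is a minimal sketch with hypothetical inputs: a $150 million incentive package, 50 local jobs actually created, and 300 jobs promised at approval.

```python
# Compare incentives with jobs actually delivered. Inputs are hypothetical.


def incentive_per_job(tax_breaks: float, local_jobs: int) -> float:
    """Dollars of incentives per local job actually created."""
    if local_jobs <= 0:
        raise ValueError("no local jobs created")
    return tax_breaks / local_jobs


def delivery_rate(actual_jobs: int, promised_jobs: int) -> float:
    """Share of promised jobs that materialized."""
    return actual_jobs / promised_jobs


if __name__ == "__main__":
    TAX_BREAKS = 150e6  # hypothetical value of incentives, dollars
    ACTUAL_LOCAL = 50   # hypothetical local hires, counted after opening
    PROMISED = 300      # hypothetical jobs announced at approval
    print(f"${incentive_per_job(TAX_BREAKS, ACTUAL_LOCAL):,.0f} in incentives per local job")
    print(f"{delivery_rate(ACTUAL_LOCAL, PROMISED):.0%} of promised jobs delivered")
```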

The data center boom is real. The question is whether it’s sustainable—economically, environmentally, and politically. We’re about to find out.

In summary, the cloud isn’t a cloud. It’s buildings at the end of wires and pipes, competing with your home for the same power and water. We’re building the physical infrastructure for the AI future at breakneck speed, with almost no transparency about who pays or what happens if the boom doesn’t deliver. The technology exists to do this sustainably (or at least more sustainably), but the incentive structure rewards grabbing power fastest, leaving communities and the environment holding the bag. Without policy intervention requiring transparency, accountability, and strategic coordination, we’re setting up both an environmental disaster and a potential multi-trillion-dollar stranded asset crisis. If we’re serious about AI’s potential benefits, we need to be equally serious about these real-world limits.


Thanks, Natalia.
